States Propose Legislation to Combat AI Robocalls During Elections

New Hampshire Legislators Propose Law to Ban Deepfake Phone Calls Before Elections

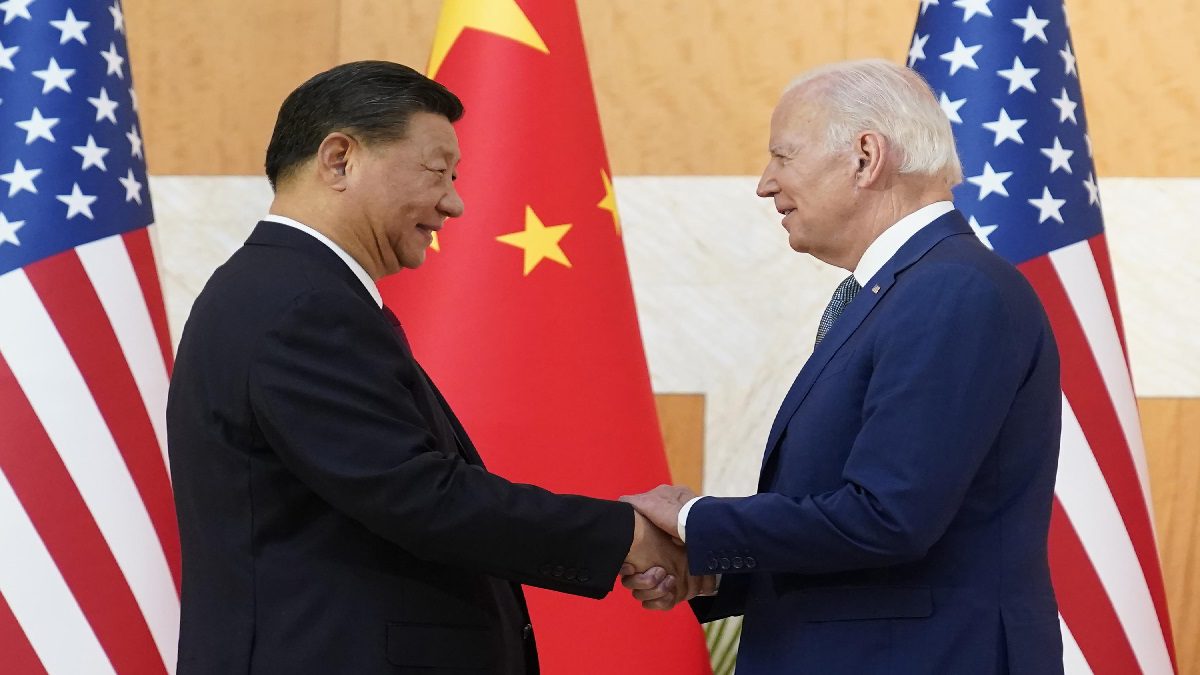

The rise of deepfake technology in political campaigns has sparked a wave of legislative action across the United States. In New Hampshire, a recent incident in which more than 20,000 voters received a fake call impersonating President Joe Biden has prompted lawmakers to propose a new law banning deepfake phone calls within 90 days of an election.

The proposed legislation, which has already passed the state House and is now heading to the Senate, would require any AI-generated call to be accompanied by a disclosure that artificial intelligence was used. New Hampshire is just one of 39 states considering similar measures to ensure transparency in political communications.

Wisconsin has already enacted a similar law, which imposes a $1,000 fine per violation. In Florida, pending legislation could result in criminal charges for undisclosed AI-enabled messages. Arizona is also weighing a bill that would require disclaimers in the 90 days before an election, with repeated violations potentially leading to felony charges.

At the federal level, bipartisan bills are in the works to ban the use of AI-generated material targeting candidates for federal office. Additionally, technology companies have pledged to take action against the misuse of AI in elections. A group of tech executives, including representatives from Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, and TikTok, has signed an accord to adopt “reasonable precautions” to prevent the spread of deceptive AI content.

The voluntary framework outlined in the accord includes methods for detecting and labeling deceptive AI content, sharing best practices, and responding swiftly to the spread of such content. With both tech companies and lawmakers taking action, efforts to combat the threat of AI-generated deepfakes in political campaigns are under way on several fronts.